Innovations In Sensor Technology

Sensors are the “eyes” and “ears” of processors, co-processors, and computing modules. They come in all shapes, forms, and functions, and they are being deployed in a rapidly growing number of applications, ranging from edge computing and IoT to smart cities, smart manufacturing, hospitals, industrial settings, machine learning, and automotive.

Each of these use cases relies on chips to capture data about what is happening in our analog world, and then digitize the data so it can be processed, stored, combined, mined, correlated, and utilized by both humans and machines.

“In the new era of edge processing across many different application spaces, we see multiple trends in the development of next-generation sensor technology,” said Rich Collins, director of product marketing at Synopsys. “Sensor implementations are moving beyond the basic need to capture and interpret a combination of environmental conditions, such as temperature, motion, humidity, proximity, and pressure, in an efficient way.”

Energy and power efficiency are critical for these applications. Many rely on batteries and share resources between different sensors, and they often include some type of always-on circuitry for faster boot-up or to detect motion, gestures, or specific keywords. In the past, these types of functions typically were built into the central processor, but that approach is wasteful from an energy perspective.

“In a variety of different use cases in the automotive, mobile and IoT markets, developing a flexible system optimized with dedicated processors, as well as hardware accelerators offloading the host processor, seems to be emerging as a basic requirement,” Collins said. “Communication of sensor data also is becoming an essential feature for many of these implementations. And since communication tasks are often periodic in nature, we see the same processing elements leveraged for the sensor data capture, fusion processing, and communication tasks, enabling more power-efficient use of processing resources.”

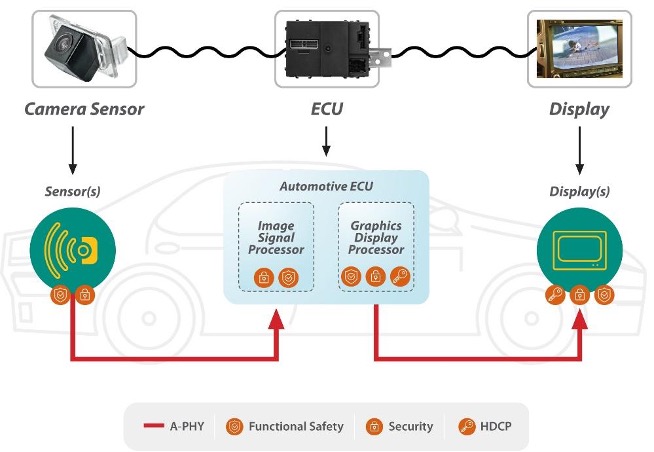

This is especially true for the automotive industry, which is emerging as the poster child for sensor technology as it undergoes a transformation from mechanical and analog to electrical and digital. A new car’s electronic control units (ECUs) now handle everything from acceleration and deceleration to monitoring the vehicle inside and out. Advanced driver assistance systems (ADAS) rely on different types of sensors mounted on the vehicle to gather the data the ECUs require for making decisions.

That will become more critical as vehicles add increasing levels of autonomy over the next decade or so. Sensors will play important roles in meeting safety, security, and convenience objectives.

Data in motion

On the automotive front, advancing from SAE Level 4 (high automation) to Level 5 (full automation) will depend on a process in which individual smart ECUs use sensor-collected information to make decisions. That process has three major steps:

Sensor electronics must accurately turn analog signals into digital data bits.

Each ECU must be able to interpret that data to make the appropriate decisions, such as when to accelerate or decelerate.

At the fully autonomous driving stage, machine learning will be applied, enabling self-driving vehicles to navigate in various environments.
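The first step above, turning an analog signal into digital data bits, can be illustrated with a minimal sketch of an idealized analog-to-digital converter (ADC). The 3.3 V reference, the 12-bit resolution, and the function names are assumptions chosen for illustration; they do not come from any particular sensor discussed here, and real converters add noise, offset, and nonlinearity that this sketch ignores.

```python
def quantize(voltage: float, v_ref: float = 3.3, bits: int = 12) -> int:
    """Map an analog voltage in [0, v_ref] to an unsigned ADC code.

    v_ref and bits are illustrative assumptions, not values from the article.
    """
    max_code = (1 << bits) - 1                 # 4095 for a 12-bit converter
    clamped = min(max(voltage, 0.0), v_ref)    # inputs outside the range saturate
    return round(clamped / v_ref * max_code)


def to_voltage(code: int, v_ref: float = 3.3, bits: int = 12) -> float:
    """Reconstruct the voltage that an ADC code represents."""
    max_code = (1 << bits) - 1
    return code / max_code * v_ref


if __name__ == "__main__":
    for volts in (0.0, 1.0, 2.5, 3.3, 5.0):
        code = quantize(volts)
        print(f"{volts:.2f} V -> code {code} -> {to_voltage(code):.4f} V")
```

The reconstructed voltage differs from the input by at most one quantization step (`v_ref / max_code`), which is the basic accuracy limit that the sensor electronics impose on everything downstream.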

In effect, the vehicle needs to “see” or “sense” its surroundings to stay on the road and to avoid accidents. Today, these signals come from visible light, radio waves, infrared (IR), and ultrasound, and each must be converted to digital data bits.

A vehicle uses a combination of sensors — for example, lidar, radar, and/or HD cameras — to detect objects, including other moving vehicles, and determine their distance and speed.
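As a rough illustration of how distance and speed can be derived from such sensors, the sketch below applies two textbook relations: range from the round-trip time of a reflected pulse, and radial speed from the Doppler shift of a reflected carrier. The function names and the 77 GHz carrier in the usage lines are assumptions for illustration only. Production radar and lidar pipelines are considerably more involved.

```python
SPEED_OF_LIGHT_M_S = 299_792_458.0


def range_from_time_of_flight(round_trip_s: float) -> float:
    """Distance to a target given the round-trip time of a light or radio pulse."""
    # The pulse travels out and back, so the one-way distance is half the path.
    return SPEED_OF_LIGHT_M_S * round_trip_s / 2.0


def radial_speed_from_doppler(doppler_shift_hz: float, carrier_hz: float) -> float:
    """Closing speed of a target from the Doppler shift of a reflected carrier."""
    # A reflection shifts the frequency twice, hence the factor of 2.
    return doppler_shift_hz * SPEED_OF_LIGHT_M_S / (2.0 * carrier_hz)


if __name__ == "__main__":
    # Hypothetical measurements: a 1 microsecond echo and a 15.4 kHz shift
    # on an assumed 77 GHz automotive radar carrier.
    print(f"range: {range_from_time_of_flight(1e-6):.1f} m")
    print(f"speed: {radial_speed_from_doppler(15_400.0, 77e9):.1f} m/s")
```

Ultrasonic sensors follow the same time-of-flight idea, with the speed of sound in air substituted for the speed of light.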

Automakers have not yet standardized on which sensors are best for autonomous driving. For example, Tesla is taking a vision-only approach for some of its newer models by combining AI supercomputers and multiple HD cameras. One such supercomputer consists of 5,760 GPUs capable of 1.8 exaFLOPS (EFLOPS), with a connection speed of 1.6 terabytes per second (TBps) and 10 petabytes of storage. Essentially, Tesla’s aim is to use machine learning to simulate human driving, an approach that requires intensive training.
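To put the supercomputer figures in perspective, the short calculation below divides the quoted aggregate throughput by the quoted GPU count. It assumes the 1.8 EFLOPS is spread evenly across all 5,760 GPUs, which is a simplification; the constant and function names are illustrative.

```python
GPU_COUNT = 5_760        # quoted GPU count
TOTAL_FLOPS = 1.8e18     # quoted aggregate throughput: 1.8 exaFLOPS


def per_gpu_tflops(total_flops: float = TOTAL_FLOPS, gpu_count: int = GPU_COUNT) -> float:
    """Average throughput per GPU in teraFLOPS, assuming an even split."""
    return total_flops / gpu_count / 1e12


if __name__ == "__main__":
    print(f"{per_gpu_tflops():.1f} TFLOPS per GPU")
```

Under that assumption, each GPU contributes about 312.5 teraFLOPS on average.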

semiengineering.com