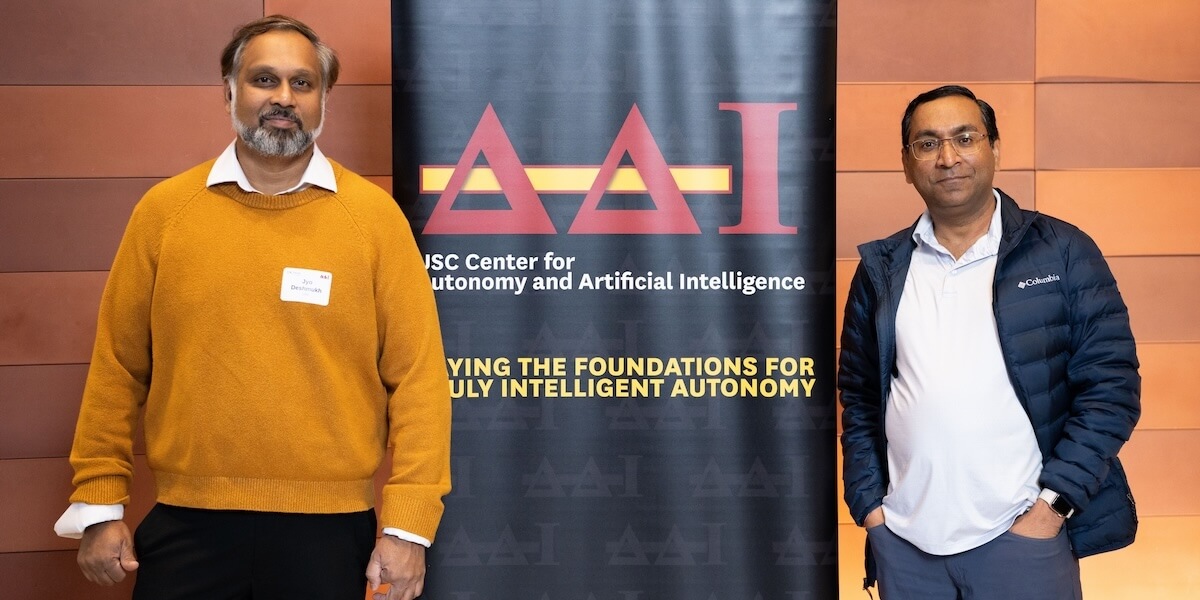

Jyotirmoy Deshmukh and Rahul Jain of the USC Center for Autonomy and AI (Photo/Braden Dawson)

For years, the promise of artificial intelligence has captivated researchers and the public alike. But when it comes to safety-critical autonomous systems — self-driving trucks, military drones, robotic assistants — AI has a big problem: overconfidence.

The USC Center for Autonomy and AI tackled this challenge head-on during its fall 2025 workshop Oct. 9 at USC’s Michelson Hall. The theme, “Is the Future of Autonomy in Foundation Models?” brought together about 70 faculty members, industry representatives and students to explore whether the technology powering ChatGPT and other AI systems could make autonomous vehicles and robots safer and smarter.

Foundation models are general-purpose AI systems trained on diverse data and applicable well beyond the domains that data came from. While these models have demonstrated almost human-like reasoning on text-based tasks, whether they can be applied to safety-critical autonomous systems remains an open question.

“The foundation model methodology relies on the premise that you can build a more powerful AI model if the training data comes from a wide variety of settings and use cases. It may then generalize well even to other settings it has not seen before,” said Rahul Jain, director of the USC Center for Autonomy and AI and a USC Viterbi professor of electrical and computer engineering, computer science, and industrial and systems engineering.

Added Jain: “To solve the autonomy problem, we only use data from one domain. For example, sidewalk robotic data is not used to train self-driving cars, and vice versa. So, the question is, if we merge the data from these two to train a single AI foundation model, can we expect it to be more powerful and also work well on warehouse robots?”

Ahmed Sadek, Head of Physical AI Systems and VP of Engineering at Qualcomm, delivering the Keynote Address (Photo/Braden Dawson)

Ahmed Sadek, head of physical AI systems and vice president of engineering at Qualcomm, delivered the keynote address. He discussed Qualcomm’s work in autonomous driving and the company’s engagement with BMW.

Faculty and Industry Perspectives

The all-day event featured 16 speakers from both academia and industry, including representatives from Ford Otosan, Toyota Research Institute, The Boeing Company, Lockheed Martin, Northrop Grumman and The Aerospace Corporation.

USC Viterbi faculty speakers included Yan Liu, professor of computer science, electrical and computer engineering, and biomedical sciences; Bistra Dilkina, Dr. Allen and Charlotte Ginsburg Early Career Chair in Computer Science and associate professor of computer science; Mahdi Soltanolkotabi, professor of electrical and computer engineering, computer science, and industrial and systems engineering; Jesse Thomason, assistant professor of computer science; Ketan Savla, John and Dorothy Shea Early Career Chair in Civil Engineering and associate professor of civil and environmental engineering and electrical and computer engineering; Erdem Biyik, assistant professor of computer science and electrical and computer engineering; and Yue Wang, assistant professor of computer science.

Sean O’Brien, manager of programs for advanced autonomy at Northrop Grumman, discussing autonomy research with graduate students at the poster session (Photo/Courtesy of Maurena Nacheff-Benedict)

Industry speakers included Elizabeth Davison, principal director in the Office of the CTO at The Aerospace Corp.; Mauricio Castillo-Effen, an LM fellow for assurance at Lockheed Martin Advanced Technology Labs; and Sean O’Brien, manager of programs for advanced autonomy at Northrop Grumman.

Teaching AI to Doubt Itself

Eren Aydemir, perception technologies development lead at Ford Otosan, addressed a critical safety issue. His company is developing autonomous trucks that can drive themselves on highways while human operators control them remotely in complex urban environments.

But the AI that “sees” the road thinks it knows more than it does.

“Deep learning models tend to be overconfident, even when they’re wrong, especially in difficult scenes like poor lighting, fog or occlusion,” Aydemir explained.

His team tested two approaches. The first, called temperature scaling, adjusts the AI’s confidence levels. While helpful, Aydemir noted that “the overall gain is quite limited.”
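To make the idea concrete, here is a minimal sketch of temperature scaling. It is not Ford Otosan's implementation: the class scores and the temperature value of 2.0 are assumptions chosen for illustration. In practice the temperature is usually fit on held-out validation data.

```python
import math

def softmax(logits, temperature=1.0):
    """Turn raw scores into probabilities; a temperature above 1 softens them."""
    scaled = [z / temperature for z in logits]
    peak = max(scaled)                        # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]

# Hypothetical raw scores for three classes (for example truck, car, pedestrian).
logits = [4.0, 1.0, 0.5]

raw_conf = max(softmax(logits))               # confidence before scaling
scaled_conf = max(softmax(logits, 2.0))       # confidence after scaling (T = 2.0 is assumed)
```

Dividing the scores by a temperature above 1 lowers the top confidence without changing which class ranks first, which is why the technique can improve calibration but not accuracy.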

The second approach, Monte Carlo Dropout, has proven more promising. This method makes the AI analyze the same image five times, each time with a different random subset of the network's units switched off. If the answers vary, the system recognizes uncertainty and flags the scene as challenging. By focusing training on these difficult images, the team achieved a 3% accuracy improvement, significant when a truck encounters thousands of objects per hour.
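The selection step can be sketched roughly as follows. This is a toy illustration, not the team's code: `toy_model` is a hypothetical stand-in for a perception network, and the dropout rate and the uncertainty threshold are assumed values. Only the five passes come from the talk.

```python
import random

def toy_model(features, weights, drop_prob, rng):
    """Hypothetical stand-in for a network: a linear scorer with dropout left on."""
    keep = 1.0 - drop_prob
    score = 0.0
    for x, w in zip(features, weights):
        if rng.random() < keep:               # randomly drop each input
            score += x * w / keep             # rescale so the expected score is unchanged
    return 1 if score > 0 else 0              # predicted class label

def mc_dropout_uncertainty(features, weights, passes=5, drop_prob=0.3, seed=0):
    """Run several stochastic passes; return the share that disagree with the majority."""
    rng = random.Random(seed)
    votes = [toy_model(features, weights, drop_prob, rng) for _ in range(passes)]
    majority = max(set(votes), key=votes.count)
    return 1.0 - votes.count(majority) / passes

def select_hard_samples(dataset, weights, threshold=0.2):
    """Keep the samples whose predictions vary across passes (threshold is assumed)."""
    return [s for s in dataset if mc_dropout_uncertainty(s, weights) >= threshold]
```

Samples returned by `select_hard_samples` would be the ones prioritized for labeling and retraining. A real system would measure disagreement over class probabilities or detection boxes rather than a single label.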

“Uncertainty-based selection doesn’t give immediate gains; it becomes more beneficial as the data set grows, helping the model focus on challenging high-value samples,” Aydemir said.

Building AI That Works With Humans

Jonathan DeCastro, a research scientist at Toyota Research Institute, tackled another challenge: training AI systems to collaborate with humans when data is scarce.

“When humans and AI operate together, they must operate safely and robustly,” DeCastro said. But “when you include humans, many of [the] assumptions break down.”

The problem is the lack of interactive data. Gathering real examples of humans and AI working together is expensive and time-consuming. DeCastro’s team has developed a solution: blending driving simulators, computer-generated human agents with different behaviors and recordings of real drivers.

His team’s “Dream to Assist” system tries to understand a driver’s high-level goal — whether passing a slow vehicle or staying in a lane. The system then either helps execute that plan safely or warns the driver if it’s dangerous, using steering wheel feedback, visual cues and audio alerts.

“The goal is to learn what the human’s intent actually is, their high-level intent, and to be able to leverage this information in order to execute it better,” DeCastro said.

Tests have shown that the system correctly understood driver intentions and helped them perform better and more safely. The breakthrough offers genuine collaboration that respects human decisions while maintaining safety.

Consensus and Concerns

(Left to Right) Erdem Biyik, USC Viterbi Assistant Professor of Computer Science and Electrical and Computer Engineering; Alberto Speranzon, chief scientist for autonomy at Lockheed Martin Advanced Technology Labs; Sean O’Brien, manager of programs for advanced autonomy at Northrop Grumman; and Ahmed Sadek, head of physical AI systems and vice president of engineering at Qualcomm (Photo/Courtesy of Maurena Nacheff-Benedict)

A panel discussion moderated by Biyik brought together Sadek, Alberto Speranzon — chief scientist for autonomy at Lockheed Martin Advanced Technology Labs — and Northrop Grumman’s O’Brien to discuss whether foundation models can be used for safety-critical autonomous systems.

The consensus: ChatGPT-type language models are not yet adequate for autonomous vehicles, drones and similar applications. Safety assurance remains paramount, and more research is needed.

“There is skepticism that [current] language foundation models would be adequate to solve robotics and autonomous system challenges,” Jain said.

As autonomous systems become more prevalent in daily life, the questions raised at this year’s workshop grow increasingly urgent. The future of autonomy may not lie in simply making AI smarter, but in making it honest about what it doesn’t know — and teaching it to work alongside the humans whose lives depend on it.

An Ongoing Mission

Founded in August 2021, the USC Center for Autonomy and AI brings together faculty from nearly all USC Viterbi departments. It connects academic researchers and industry leaders across sectors to solve challenges in AI and autonomy.

Led by Jain as director and Jyotirmoy Deshmukh, USC Viterbi associate professor of computer science and electrical and computer engineering, as co-director, the center focuses on emerging technologies with real-world applications.

Published on November 11th, 2025

Last updated on November 11th, 2025